The physics of heat engines provides one of the clearest windows into the structure of the Second Law of Thermodynamics. In this article we compare three systems:

Index structures are fundamental to database systems, enabling efficient data retrieval and query processing. Traditionally, these structures followed hand-designed algorithms like B-trees, hash tables, and R-trees. However, the last few years have witnessed a paradigm shift with the emergence of learned index structures that leverage machine learning to optimize for specific data distributions and workloads.
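To make the core idea concrete, here is a toy sketch (not taken from any specific system) of a learned index. A least-squares line predicts where a key sits in a sorted array, and the worst-case prediction error recorded at build time bounds a small binary search around the guess. The class and method names are hypothetical, and real learned indexes use richer models (for example, a hierarchy of models) rather than a single line.

```python
import bisect


class LinearLearnedIndex:
    """Toy learned index: a linear model predicts a key's position in a
    sorted array; the max training error bounds the final search window."""

    def __init__(self, keys):
        self.keys = sorted(keys)  # assumes at least one key
        n = len(self.keys)
        positions = range(n)
        # Least-squares fit of position ~ slope * key + intercept
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var_k = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (p - mean_p) for k, p in zip(self.keys, positions))
        self.slope = cov / var_k if var_k else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # Worst-case error of the model over the indexed keys
        self.max_err = max(abs(self._predict(k) - p) for k, p in zip(self.keys, positions))

    def _predict(self, key):
        return round(self.slope * key + self.intercept)

    def lookup(self, key):
        """Return the position of key in the sorted array, or None if absent."""
        n = len(self.keys)
        pred = self._predict(key)
        # Clamp the window to [0, n] and keep hi >= lo
        lo = min(max(0, pred - self.max_err), n)
        hi = max(lo, min(n, pred + self.max_err + 1))
        i = bisect.bisect_left(self.keys, key, lo, hi)
        if i < n and self.keys[i] == key:
            return i
        return None
```

The model replaces the tree traversal of a B-tree. The smoother the key distribution, the smaller `max_err` and the narrower the final search.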

The rise of large multimodal models (LMMs) has revolutionized artificial intelligence, enabling single architectures to process and generate content across text, images, audio, and video. Models like GPT-4V, Claude 3 Opus, and Gemini Ultra have demonstrated impressive multimodal capabilities but come with substantial computational costs, often requiring hundreds of billions of parameters and specialized hardware for inference.

Vector databases have become essential infrastructure for modern AI applications, from retrieval-augmented generation (RAG) to similarity search and recommendation systems. As these applications scale to handle billions or trillions of vectors, the need for efficient vector compression techniques has become increasingly critical. Traditional approaches focused on general-purpose compression algorithms, but recent research has shifted toward domain-adaptive methods that leverage the specific characteristics of vector distributions to achieve superior compression-quality tradeoffs.

This post explores recent advances in domain-adaptive vector compression, with a focus on developments from 2024 onwards, including key challenges, mathematical formulations, architectural innovations, and evaluation methodologies.

Vector compression aims to represent high-dimensional vectors using fewer bits while preserving their utility for downstream tasks. Traditional approaches include:

These methods provided good compression but applied the same compression strategy regardless of the domain characteristics or the specific task requirements.
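As a concrete baseline, here is a minimal sketch of min-max scalar quantization, one common general-purpose technique; the function names are illustrative. Each float becomes one byte, so for float32 inputs the codes take a quarter of the space, plus two floats of per-vector metadata. The same rule is applied whatever the data looks like, which is the limitation described above.

```python
def quantize_uint8(vec):
    """Min-max scalar quantization of a float vector to 8-bit codes."""
    lo, hi = min(vec), max(vec)
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero for constant vectors
    codes = bytes(round((v - lo) / scale) for v in vec)
    return codes, lo, scale


def dequantize_uint8(codes, lo, scale):
    """Approximate reconstruction; each value is off by at most scale / 2."""
    return [lo + c * scale for c in codes]
```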

Several important trends have emerged in vector compression research:

Perhaps the most significant challenge in vector compression is maintaining performance across shifting data distributions. As embedding models improve and data distributions evolve, compression methods optimized for one distribution often perform poorly on others. This is particularly problematic in production environments where:

Traditional compression methods might require complete retraining and index rebuilding when distributions shift, making them impractical for dynamic production environments.

Neural Codebook Adaptation (Chen et al., 2024) introduces a novel approach that enables rapid adaptation of quantization codebooks to new domains without requiring complete retraining:

The method uses a hypernetwork architecture that generates domain-specific codebooks:

\[C_d = H_\theta(z_d)\]

where $C_d$ is the codebook for domain $d$, $H_\theta$ is a hypernetwork with parameters $\theta$, and $z_d$ is a learned domain embedding.

The key innovation is the two-phase training process:

Meta-training phase across multiple domains: \(\min_\theta \mathbb{E}_{d \sim \mathcal{D}} \left[ \mathcal{L}_\text{quant}(X_d, C_d = H_\theta(z_d)) \right]\)

where $\mathcal{L}_\text{quant}$ is a quantization loss (e.g., reconstruction error), $X_d$ represents vectors from domain $d$, and $\mathcal{D}$ is a distribution over domains.

Adaptation phase for a new domain $d'$: \(\min_{z_{d'}} \mathcal{L}_\text{quant}(X_{d'}, C_{d'} = H_\theta(z_{d'}))\)

Only the domain embedding $z_{d'}$ is optimized, while the hypernetwork $H_\theta$ remains fixed.
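The adaptation phase can be illustrated with a deliberately tiny sketch. It is not the paper's architecture and assumes a *linear* hypernetwork $H_\theta(z) = Wz + b$ (reshaped into $K$ codewords of dimension $D$) and a nearest-codeword squared-error loss for $\mathcal{L}_\text{quant}$. Gradient descent updates only $z$, with $W$ and $b$ frozen. All function names are hypothetical.

```python
def make_codebook(W, b, z, K, D):
    """Linear 'hypernetwork': flat codebook = W @ z + b, reshaped to K x D."""
    flat = [sum(w * zj for w, zj in zip(row, z)) + bias for row, bias in zip(W, b)]
    return [flat[k * D:(k + 1) * D] for k in range(K)]


def quant_loss_and_grad(X, codebook):
    """Mean squared distance to the nearest codeword, and its gradient with
    respect to the flattened codebook (assignments held fixed)."""
    K, D = len(codebook), len(codebook[0])
    grad = [0.0] * (K * D)
    loss = 0.0
    for x in X:
        dists = [sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in codebook]
        k = min(range(K), key=dists.__getitem__)
        loss += dists[k]
        for d in range(D):
            grad[k * D + d] -= 2.0 * (x[d] - codebook[k][d])
    n = len(X)
    return loss / n, [g / n for g in grad]


def adapt_domain_embedding(X, W, b, z0, K, D, lr=0.1, steps=100):
    """Gradient descent on the domain embedding z only; W and b stay frozen."""
    z = list(z0)
    for _ in range(steps):
        _, g = quant_loss_and_grad(X, make_codebook(W, b, z, K, D))
        # Chain rule through the linear hypernetwork: dL/dz = W^T dL/dC
        grad_z = [sum(W[r][j] * g[r] for r in range(len(W))) for j in range(len(z))]
        z = [zj - lr * gj for zj, gj in zip(z, grad_z)]
    return z
```

Because only the low-dimensional $z$ is trained, each adaptation step is cheap. This is consistent with the claim that adaptation takes seconds rather than a full retraining.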

This approach allows adaptation to new domains using only a small number of examples (100-1000 vectors) and requires just seconds of fine-tuning rather than hours of retraining. Experiments show:

HMEC (Wu et al., 2024) proposes a mixture-of-experts approach to vector compression, where different compression experts specialize in different regions of the vector space:

\[\hat{x} = \sum_{i=1}^{E} g_i(x) \cdot f_i(x)\]

where $\hat{x}$ is the reconstructed vector, $g_i(x)$ is the gating weight for expert $i$, and $f_i(x)$ is the output of compression expert $i$.

The gating function uses a hierarchical routing mechanism:

\[g_i(x) = \prod_{l=1}^{L} g^l_{i_l}(x)\]

where $L$ is the number of hierarchy levels, and $g^l_{i_l}(x)$ is the routing probability at level $l$.
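A small sketch can show how the product of per-level routing probabilities yields valid mixture weights. It assumes $L = 2$ levels, linear routers with softmax outputs, and simple rounding quantizers as stand-in experts; none of these choices come from the HMEC paper, and all names are hypothetical. Because each level's softmax sums to 1, the products $g_i(x)$ also sum to 1 over all experts.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def hierarchical_gates(x, top_router, sub_routers):
    """Two-level routing: g_(i1,i2)(x) = g^1_{i1}(x) * g^2_{i2}(x | i1)."""
    top = softmax([dot(w, x) for w in top_router])
    gates = []
    for p_group, router in zip(top, sub_routers):
        for p_sub in softmax([dot(w, x) for w in router]):
            gates.append(p_group * p_sub)
    return gates


def mixture_reconstruct(x, gates, experts):
    """x_hat = sum_i g_i(x) * f_i(x)."""
    outputs = [f(x) for f in experts]
    return [sum(g * out[d] for g, out in zip(gates, outputs)) for d in range(len(x))]


def make_rounding_expert(step):
    """Hypothetical compression expert: uniform scalar quantization with a given step."""
    return lambda v: [step * round(t / step) for t in v]
```

In a trained system the routers and experts would be learned end-to-end. Here, for example, `[make_rounding_expert(s) for s in (1.0, 0.5, 0.25, 0.1)]` gives four experts of increasing precision for the four leaves.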

The compression experts use different strategies optimized for different vector distributions (e.g., sparse vs. dense, clustered vs. uniform). The entire model is trained end-to-end with a combination of reconstruction loss and task-specific losses:

\[\mathcal{L} = \lambda_1 \mathcal{L}_\text{recon}(x, \hat{x}) + \lambda_2 \mathcal{L}_\text{task}(x, \hat{x})\]

HMEC demonstrates remarkable adaptivity across domains:

- 25-40% lower reconstruction error than single-strategy methods
- Automatic allocation of more bits to important vectors
- Graceful handling of out-of-distribution vectors

CRVQ (Lin et al., 2024) introduces a novel training objective that aligns compressed vectors with the semantic structure of the uncompressed space:

\[\mathcal{L}_\text{CRVQ} = \mathcal{L}_\text{recon} + \lambda \mathcal{L}_\text{contrastive}\]

where:

\[\mathcal{L}_\text{recon} = \frac{1}{N}\sum_{i=1}^{N} \|x_i - \hat{x}_i\|_2^2\]

\[\mathcal{L}_\text{contrastive} = -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp(s(x_i, \hat{x}_i)/\tau)}{\sum_{j=1}^{N}\exp(s(x_i, \hat{x}_j)/\tau)}\]

where $s(\cdot,\cdot)$ is a similarity function and $\tau$ is a temperature parameter.

The contrastive term ensures that compressed vectors maintain the same relative relationships as the original vectors, even when absolute reconstruction is imperfect. This is particularly valuable for preserving semantic relationships in embeddings.
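The two loss terms above can be computed directly, as in this pure-Python sketch. It assumes cosine similarity for $s(\cdot,\cdot)$, and the defaults for $\lambda$ and $\tau$ are arbitrary placeholders rather than values from the paper. The contrastive term is a cross-entropy in which each original vector $x_i$ must pick out its own reconstruction $\hat{x}_i$ among all $\hat{x}_j$ in the batch.

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(t * t for t in a))
    nb = math.sqrt(sum(t * t for t in b))
    return sum(s * t for s, t in zip(a, b)) / (na * nb)


def crvq_loss(X, X_hat, lam=0.1, tau=0.07, sim=cosine):
    """Return (total, recon, contrastive) for a batch of vectors."""
    n = len(X)
    # Mean squared L2 reconstruction error
    recon = sum(sum((a - b) ** 2 for a, b in zip(x, xh)) for x, xh in zip(X, X_hat)) / n
    contrastive = 0.0
    for i in range(n):
        logits = [sim(X[i], X_hat[j]) / tau for j in range(n)]
        m = max(logits)  # log-sum-exp shift for numerical stability
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        contrastive -= logits[i] - log_denom
    contrastive /= n
    return recon + lam * contrastive, recon, contrastive
```

Swapping two reconstructions leaves the set of reconstructed vectors unchanged but breaks the pairing, and the contrastive term rises sharply. This is the relative-structure signal the paragraph above describes.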

To enable domain adaptation, CRVQ introduces adapter layers:

\[A_d(x) = W_d \cdot x + b_d\]

where $W_d$ and $b_d$ are domain-specific parameters.

When adapting to a new domain, only these lightweight adapters need to be trained while the core quantization model remains fixed. This approach achieves:

DIFFBIN (Zhao et al., 2024) leverages the generative capabilities of diffusion models for extreme vector compression:

The approach represents each vector as a short binary code:

\[b = \text{Enc}_\theta(x) \in \{0,1\}^m\]

where $m \ll d$ (the original dimension).

A diffusion model is trained to reconstruct the original vector from this binary code:

\[\hat{x} = \text{Diff}_\phi(b, t=0)\]

where $\text{Diff}_\phi$ is a diffusion model that generates the vector by denoising from random noise, conditioned on the binary code $b$.

The training process alternates between:

1. Optimizing the encoder $\text{Enc}_\theta$ to produce informative binary codes
2. Training the diffusion model $\text{Diff}_\phi$ to reconstruct vectors from these codes

To enable domain adaptation, DIFFBIN uses a conditional diffusion model:

\[\hat{x} = \text{Diff}_\phi(b, d, t=0)\]

where $d$ is a domain identifier.

This approach allows:

- Extreme compression rates (128× or higher) while maintaining reasonable retrieval performance
- Generation of multiple plausible reconstructions for ambiguous cases
- Rapid adaptation to new domains by fine-tuning only the domain embedding
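As a rough sanity check on the 128× figure, assume the original vectors are stored as float32 (32 bits per dimension); this storage assumption is ours, not DIFFBIN's. An $m$-bit code for a $d$-dimensional vector then gives a compression ratio of $32d/m$. With illustrative values $d = 1024$ and $m = 256$, the ratio is exactly 128×:

\[\text{ratio} = \frac{32\,d}{m} = \frac{32 \times 1024}{256} = \frac{32768}{256} = 128\]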

MRAC (Johnson et al., 2024) introduces a variable-rate compression scheme that allocates different bit rates to different vectors based on their importance:

\[R(x) = f_\theta(x, \text{context})\]

where $R(x)$ is the bit rate allocated to vector $x$, $f_\theta$ is a learned allocation function, and "context" includes factors like query frequency, cluster density, and domain characteristics.

The system maintains multiple codebooks at different compression rates:

\[C = \{C_1, C_2, \ldots, C_K\}\]

where $C_k$ is a codebook at compression rate $k$.

The allocation function is trained to optimize a system-level objective:

\[\mathcal{L}_\text{system} = \mathcal{L}_\text{task} + \lambda \cdot \text{BitRate}\]

To adapt to new domains, MRAC includes domain-specific allocation heads:

\[R_d(x) = f_{\theta,d}(x, \text{context})\]

This approach achieves:

- 2-3× better compression-quality tradeoff compared to fixed-rate methods
- Automatic adaptation to query patterns and domain characteristics
- Graceful degradation under changing memory constraints
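The trade-off in $\mathcal{L}_\text{system}$ can be illustrated with a classic rate-distortion Lagrangian. In this sketch the learned allocator $f_\theta$ is replaced by a hand-written rule, which is plainly a different technique from MRAC's. For each vector it picks the codebook that minimizes importance × distortion + λ × bits. The per-codebook distortions and the `importance` weights (a stand-in for "context" such as query frequency) are assumed inputs, and all names are hypothetical.

```python
def allocate_rates(distortions, importance, bits, lam):
    """For each vector, pick the codebook index k minimizing
    importance * distortion[k] + lam * bits[k]."""
    choice = []
    for dist_row, w in zip(distortions, importance):
        costs = [w * d + lam * b for d, b in zip(dist_row, bits)]
        choice.append(min(range(len(bits)), key=costs.__getitem__))
    return choice
```

With two vectors that compress equally well, the more frequently queried one gets the larger codebook. Raising λ pushes both back toward the cheapest rate, which mirrors the "graceful degradation under changing memory constraints" listed above.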

Recent work has established more comprehensive evaluation protocols that go beyond simple reconstruction metrics:

Several new benchmark datasets have been established specifically for evaluating domain-adaptive compression:

Based on current trends, several promising research directions emerge:

Zero-shot domain adaptation: Compression methods that can adapt to new domains without any examples, perhaps leveraging large language models to predict domain characteristics

Multi-task optimization: Compression schemes jointly optimized for multiple downstream tasks (retrieval, classification, clustering) that automatically balance performance across tasks

Compression-aware embedding training: Co-designing embedding models and compression methods, where embedding models learn to produce vectors that are more amenable to compression

Theoretical understanding of compressibility across domains: Formal frameworks for understanding what makes vectors from certain domains more compressible than others

Privacy-preserving compression: Methods that provide formal privacy guarantees while maintaining utility of compressed vectors

Hardware-software co-design: Compression algorithms specifically designed for emerging hardware accelerators with novel capabilities

Domain-adaptive vector compression has emerged as a critical research area for enabling efficient, scalable AI applications. Recent advances have made significant strides in addressing the challenge of distribution shift, enabling compression methods that can rapidly adapt to new domains without sacrificing performance.

The integration of neural approaches, contrastive learning, and adaptive allocation strategies has pushed the boundaries of what’s possible in vector compression. As AI applications continue to scale and diversify, we can expect domain-adaptive compression to remain at the forefront of enabling efficient, practical systems.

Chen, S., Wang, J., & Li, F. (2024). Neural Codebook Adaptation for Domain-Adaptive Vector Quantization. ICML 2024.

Wu, Y., Singh, A., et al. (2024). Hierarchical Mixture of Experts for Adaptive Vector Compression. NeurIPS 2024.

Lin, Z., Jain, P., & Agrawal, A. (2024). Contrastive Reconstruction Vector Quantization. ICLR 2024.

Zhao, K., Xu, M., et al. (2024). DIFFBIN: Diffusion Models for Learnable Binary Embedding Compression. CVPR 2024.

Johnson, J., Chen, H., & Karrer, B. (2024). Multi-Resolution Adaptive Compression for Production-Scale Vector Databases. SIGMOD 2024.

Guo, R., Reimers, N., et al. (2023). Towards Domain-Adaptive Vector Quantization. arXiv:2312.05934.

Zhang, H., Sablayrolles, A., et al. (2024). AdaptiveSearch: Efficient Vector Search Under Distribution Shift. Information Retrieval Journal.

Williams, T., Singh, K., et al. (2024). Benchmarking Vector Compression: Beyond Reconstruction Error. VLDB 2024.

Liu, Q., Douze, M., & Jégou, H. (2023). Product Quantization for Vector Search with Large Language Model Features. Transactions on Machine Learning Research.

Dynamic Programming is a method for solving complex problems by breaking them down into simpler subproblems. It is particularly useful when a problem has overlapping subproblems and optimal substructure, meaning the optimal solution to the problem can be constructed from optimal solutions to its subproblems.
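A classic illustration is the minimum-coin change problem. The fewest coins for amount `a` is one coin plus the best answer for `a - c` (optimal substructure), and each smaller amount is reused by many larger ones (overlapping subproblems). The bottom-up sketch below fills a table once instead of recomputing those subproblems; the function name is illustrative.

```python
def min_coins(coins, amount):
    """Fewest coins summing to amount, or None if impossible (bottom-up DP)."""
    INF = float("inf")
    best = [0] + [INF] * amount  # best[a] = fewest coins needed for amount a
    for a in range(1, amount + 1):
        for c in coins:
            # Optimal substructure: extend the best solution for a - c by one coin
            if c <= a and best[a - c] + 1 < best[a]:
                best[a] = best[a - c] + 1
    return None if best[amount] == INF else best[amount]
```

For coins `[1, 3, 4]` and amount 6, greedy picking (4 + 1 + 1) uses three coins, while the table finds 3 + 3.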

While Linux is a great operating system, many applications are not available in its ecosystem, such as iTunes, OneNote or Sony Digital Paper. One solution is Wine, though I haven't gotten every app to work smoothly with it; another is running VirtualBox within Linux, which gives the same user experience as the original apps but uses more computational resources. This tutorial covers setting up a Mac OS virtual machine in VirtualBox on Ubuntu (18.01); my guide follows this useful article.

Rereading James Gleick's *Chaos: Making a New Science*, I came across the famous and shocking Li-Yorke theorem. Let \(f: \mathbf{R} \rightarrow \mathbf{R}\) be a continuous function. If \(f\) has a point of period 3 (i.e. \(f^3(x) = x\) and \(f(x), f^2(x) \neq x\)), then

For every \(k = 1,2,...\) there is a periodic point having period \(k\).

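There is an uncountable set \(S\) containing no periodic points, which satisfies \(\limsup_{n\to\infty} |f^n(p) - f^n(q)| > 0\) and \(\liminf_{n\to\infty} |f^n(p) - f^n(q)| = 0\) for all \(p, q \in S\) with \(p \neq q\), and \(\limsup_{n\to\infty} |f^n(p) - f^n(q)| > 0\) for every \(p \in S\) and every periodic point \(q\).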
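The first conclusion can be checked numerically on a concrete map. This is a sketch of our own choosing, not from Gleick's book: the tent map \(T(x) = 2x\) for \(x < 1/2\) and \(2 - 2x\) otherwise has the period-3 orbit \(2/7 \to 4/7 \to 6/7 \to 2/7\), so the theorem promises points of every period. On each of its \(2^k\) linear pieces, \(T^k(x) = \pm 2^k x + m\) with \(m\) an integer, so every fixed point of \(T^k\) in \([0, 1]\) is \(j/(2^k - 1)\) or \(j/(2^k + 1)\), and a finite search over those rationals suffices. The function names are hypothetical.

```python
from fractions import Fraction


def tent(x):
    """Tent map on [0, 1]: T(x) = 2x for x < 1/2, else 2 - 2x."""
    return 2 * x if x < Fraction(1, 2) else 2 - 2 * x


def minimal_period(x, max_k):
    """Smallest k <= max_k with T^k(x) == x, or None."""
    y = x
    for k in range(1, max_k + 1):
        y = tent(y)
        if y == x:
            return k
    return None


def periodic_point(k):
    """Find a point of minimal period k by searching all candidate fixed
    points of T^k, which have the form j / (2^k - 1) or j / (2^k + 1)."""
    for denom in (2 ** k - 1, 2 ** k + 1):
        for j in range(denom + 1):
            x = Fraction(j, denom)
            if minimal_period(x, k) == k:
                return x
    return None
```

Exact `Fraction` arithmetic matters here. In floating point, every tent-map orbit collapses to 0 after about 53 iterations because each step discards a bit of the mantissa.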

Bring beautiful natural scenery to every new tab in Chrome! Bling replaces the plain default tab background with Bing's daily photos. Minimal permissions required.

Scenario: Keymapping for specific apps in Mac.

For example, Windows and Mac use the Control and Command keys differently. This becomes annoying when using Microsoft Remote Desktop on a Mac: it doesn't provide a self-contained working environment, and shortcuts often jump to other Mac apps. Mapping the Mac Command key to Control solves the problem.

My weekend Chrome extension project: Enlighten, a handy syntax highlighting tool based on highlight.js. Try it on the Chrome Web Store or check out the source code. Any feedback will be appreciated!

Scenario: machine A (@ipA) is behind a firewall; it can reach an outside machine B (@ipB), but not vice versa. We'd like B to be able to reach A.

Solution: a reverse SSH tunnel. Since A can reach B, why not build a tunnel from A to B and let B enter the tunnel from its end?

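On A, open the tunnel (port 1234 on B is forwarded to port 22 on A):

```bash
ssh -R 1234:localhost:22 userB@ipB
```

Then on B, enter the tunnel:

```bash
ssh userA@localhost -p 1234
```

Automatic run on reboot: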

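Install autossh, then create a new public/private key pair as root with `ssh-keygen`, saving it to /root/.ssh/id_rsa. The public key must also be added to userB's ~/.ssh/authorized_keys on B (for example with `ssh-copy-id`), since the command below disables password login.

```bash
sudo apt-get install autossh
sudo ssh-keygen
```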

```bash
autossh -M 12345 -o "PubkeyAuthentication=yes" -o "PasswordAuthentication=no" -i /root/.ssh/id_rsa -R 1234:localhost:22 userB@ipB
```

Recently I was playing around with Selenium, a powerful front-end automation testing tool. Here are some examples I created to automate some simple routine work.

First, we need a browser driver registered on the PATH; ChromeDriver (for Chrome) and geckodriver (for Firefox) are common choices. Typically, put the chromedriver executable in /usr/local/bin/chromedriver (or chromedriver.exe in C:/Users/%USERNAME%/AppData/Local/Google/Chrome/Application/). Don't forget to add this location to your PATH, or pass the path explicitly when creating the driver.

```bash
# sudo apt-get install unzip
wget -N https://chromedriver.storage.googleapis.com/2.38/chromedriver_linux64.zip
unzip chromedriver_linux64.zip
chmod +x chromedriver
sudo mv -f chromedriver /usr/local/share/chromedriver
sudo ln -s /usr/local/share/chromedriver /usr/local/bin/chromedriver
sudo ln -s /usr/local/share/chromedriver /usr/bin/chromedriver
```

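```python
import requests
from lxml import html

headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.90 Safari/537.36'}

# XPath expressions for the product title and the brand attribute
xpath_product = '//h1//span[@id="productTitle"]//text()'
xpath_brand = '//div[@id="mbc"]/@data-brand'


def getBrandName(url):
    # Fetch the page with a browser-like User-Agent and parse the HTML
    page = requests.get(url, headers=headers)
    parsed = html.fromstring(page.content)
    # xpath() returns a list of matching attribute values
    return parsed.xpath(xpath_brand)
```

```python
from threading import Thread


def foo(dummy, results):
    # list.append is thread-safe in CPython, so threads can share the list
    results.append(dummy)


num_threads = 5
threads, results = [], []
for i in range(num_threads):
    process = Thread(target=foo, args=(i, results))
    process.start()
    threads.append(process)

# Wait for every thread to finish before reading the results
for process in threads:
    process.join()

print(results)  # the order depends on thread scheduling
```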
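A possible alternative to managing `Thread` objects by hand is the standard library's `concurrent.futures.ThreadPoolExecutor`. In this sketch `fetch_all` is a hypothetical helper name. `pool.map` returns results in input order rather than completion order, and re-raises any exception from a worker when its result is read.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(func, inputs, max_workers=5):
    """Apply func to every input on a thread pool; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(func, inputs))
```

For example, `brands = fetch_all(getBrandName, urls)` would scrape a hypothetical list of product `urls` concurrently. Threads suit this I/O-bound work because the GIL is released while waiting on the network.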

Stories of love, loss and redemption

I started listening to WBUR/NPR's Modern Love podcast when I was in NYC, and it has become one of my favorite podcasts. I often cry when hearing its stories of love, pain, struggle, death and youth (while driving and cooking). Well done, authors, host Meghna Chakrabarti and the New York Times!

Once you learn how to die, you learn how to live.

So many people walk around with a meaningless life. They seem half-asleep, even when they’re busy doing things they think are important. This is because they’re chasing the wrong things. The way you get meaning into your life is to devote yourself to loving others, devote yourself to your community around you, and devote yourself to creating something that gives you purpose and meaning.

The most important thing in life is to learn how to give out love, and to let it come in.

Notes on Robert C. Martin, Clean Architecture: A Craftsman's Guide to Software Structure and Design, chapters 1-3.

The goal of software architecture is to minimize the human resources required to build and maintain the required system.

Two perspectives of software:

Inspired by the New York Times article "Is the Answer to Phone Addiction a Worse Phone?", I wrote a Chrome extension (source code) that turns some of my favorite websites grayscale:

Looks promising! Enjoy new life without internet addiction!

A book about Google culture, management, and the authors’ success stories.

* NLP = Ambiguity Processing
    - Lexical Ambiguity: dog (noun vs. verb; animal vs. detestable person), context.
    - Structural Ambiguity
    - Semantic Ambiguity
    - Pragmatic Ambiguity


This is a demo of an email thread generator that works from Gmail to Gmail and Outlook. It can:

Prerequisite:

This is a simple example demonstrating how to run a Python script with different inputs in parallel and merge the results. Here the application is computing aggregate statistics for different dates through computationally intensive queries, then merging them across all dates.

```python
import glob
import subprocess
import sys
from multiprocessing import Process

import pandas as pd


def get_days(start, num_of_days):
    '''Generate a list of num_of_days consecutive dates,
    beginning with the starting date.
    '''
    date_range = pd.date_range(start, periods=num_of_days, freq='1D')
    return [dt.strftime("%Y-%m-%d") for dt in date_range]


def run_query(date):
    # Run foo.py for one date; foo.py is expected to write its own CSV file
    subprocess.run([sys.executable, "foo.py", "--date", date], check=True)


if __name__ == "__main__":
    start, num_of_days = "2016-01-01", 7  # example values; adjust as needed
    children = []
    for date in get_days(start, num_of_days):
        p = Process(target=run_query, args=(date,))
        p.start()
        children.append(p)
    for p in children:
        p.join()

    # merge results
    all_df = (pd.read_csv(filename) for filename in glob.glob("*.csv"))
    merged = pd.concat(all_df, ignore_index=True)
```

This is an application of A Neural Algorithm of Artistic Style by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge, run on a GPU (GTX 980).

The raw photo was taken at one of my favorite places, St Paul's Cathedral.

The first style is a Baroque painting: Jan Brueghel the Elder's The Entry of the Animals into Noah's Ark.

Congratulations to our #NCDataJam winners NC Food Inspector!!! pic.twitter.com/DovA13G3IM

— NC DataPalooza (@NCDatapalooza) September 24, 2016

Installing Spark is easy; here is a quick guide to installing Spark on Mac and Ubuntu.

Download Spark 2.0 from the official website

Extract the contents:

```bash
cat /Users/<yourname>/spark.tgz | tar -xz -C /Users/<yourname>/
```

Create a soft link:

```bash
cd /Users/<yourname>/
ln -s spark-* spark
```

Add shortcuts to your .bash_profile:

```bash
export SPARK_HOME=/Users/<yourname>/spark
export PYTHONPATH=$SPARK_HOME/python/:$PYTHONPATH
```

Reload your .bash_profile (`source ~/.bash_profile`) and run `$SPARK_HOME/bin/pyspark` to check the installation.

BONUS:

To get an environment similar to IPython/IPython Notebook, I added these aliases to my .bash_profile:

```bash
alias ipyspark='$SPARK_HOME/bin/pyspark --packages com.databricks:spark-csv_2.10:1.4.0'
alias ipynbspark='PYSPARK_DRIVER_PYTHON=ipython PYSPARK_DRIVER_PYTHON_OPTS="notebook --no-browser --port=7777" $SPARK_HOME/bin/pyspark --driver-memory 15g'
```

This is a tutorial on how to set up and connect to an Ubuntu server (15.10) from a Mac (OS X).

Having worked with Python for several years, here are some useful tricks and tools I'd like to share:

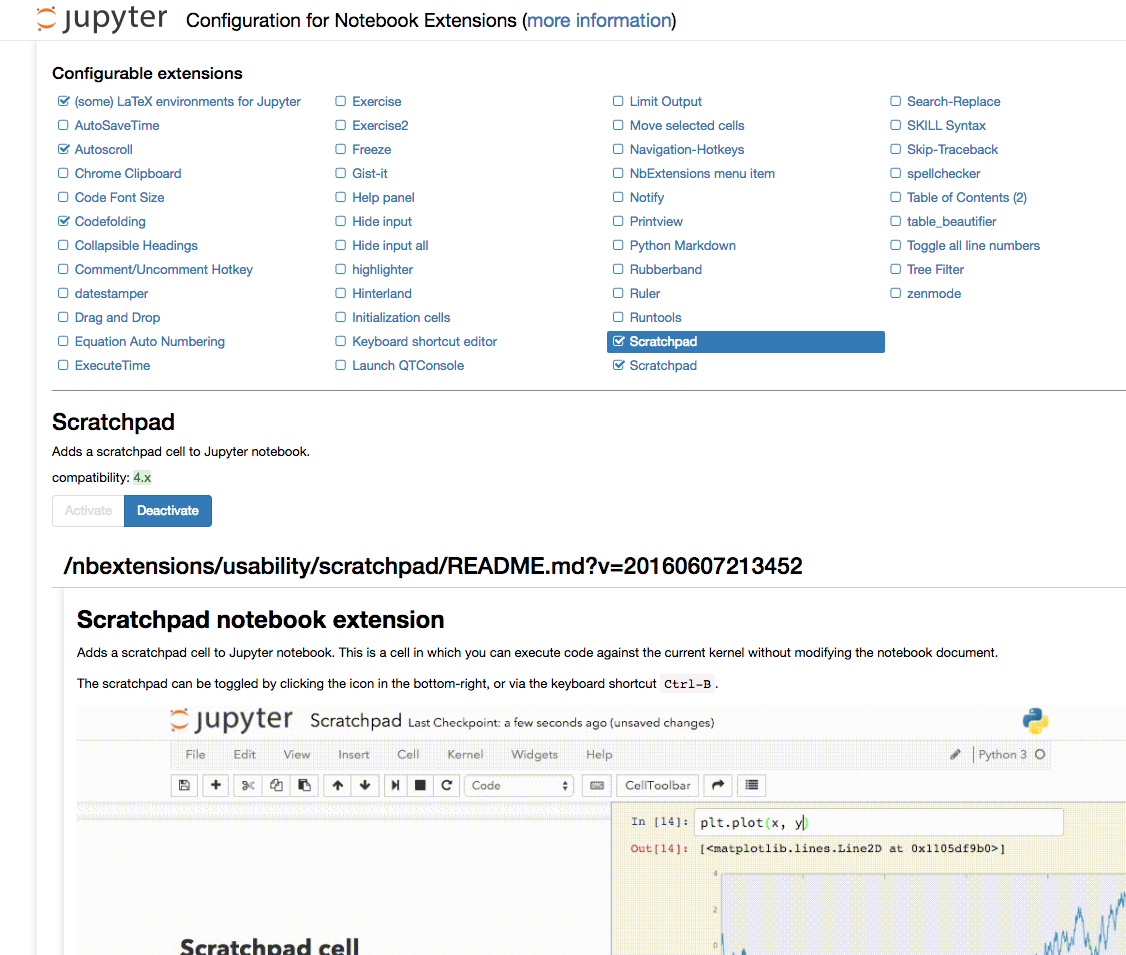

Usage: several useful tools on top of IPython Notebook

First, install the extension:

```bash
git clone https://github.com/ipython-contrib/IPython-notebook-extensions.git
cd IPython-notebook-extensions
python setup.py install
```

Then go to http://localhost:8888/nbextension/ to check which extension you'd like to use:

Personally, I like the scratchpad very much: press Ctrl+B and a scratchpad pops up. It's a good place for checking current variables, making a quick plot or running a few lines of code without inserting a cell and deleting it afterwards. A demo looks like this:

This Jekyll-based blog is the fourth website I have built recently, and I learned something new with every attempt. This blog aims to share my thoughts on (but not limited to) technology and to provide a place for discussions.

My team Tiba won both the mHealth prize and the Grand Prize at the Triangle Health Innovation Challenge.

The Grand Prize goes to Tiba's physical therapy exercise tracker (also the winner of the @validic prize!) #thinc pic.twitter.com/seb5KRJ39c

— THInC (@THINCweekend) September 20, 2015

The @validic mHealth prize goes to Tiba's physical therapy exercise tracker #thinc pic.twitter.com/hFOkgo1lVM

— THInC (@THINCweekend) September 20, 2015